#BrainUp Daily Tech News – (Sunday, February 22ⁿᵈ)

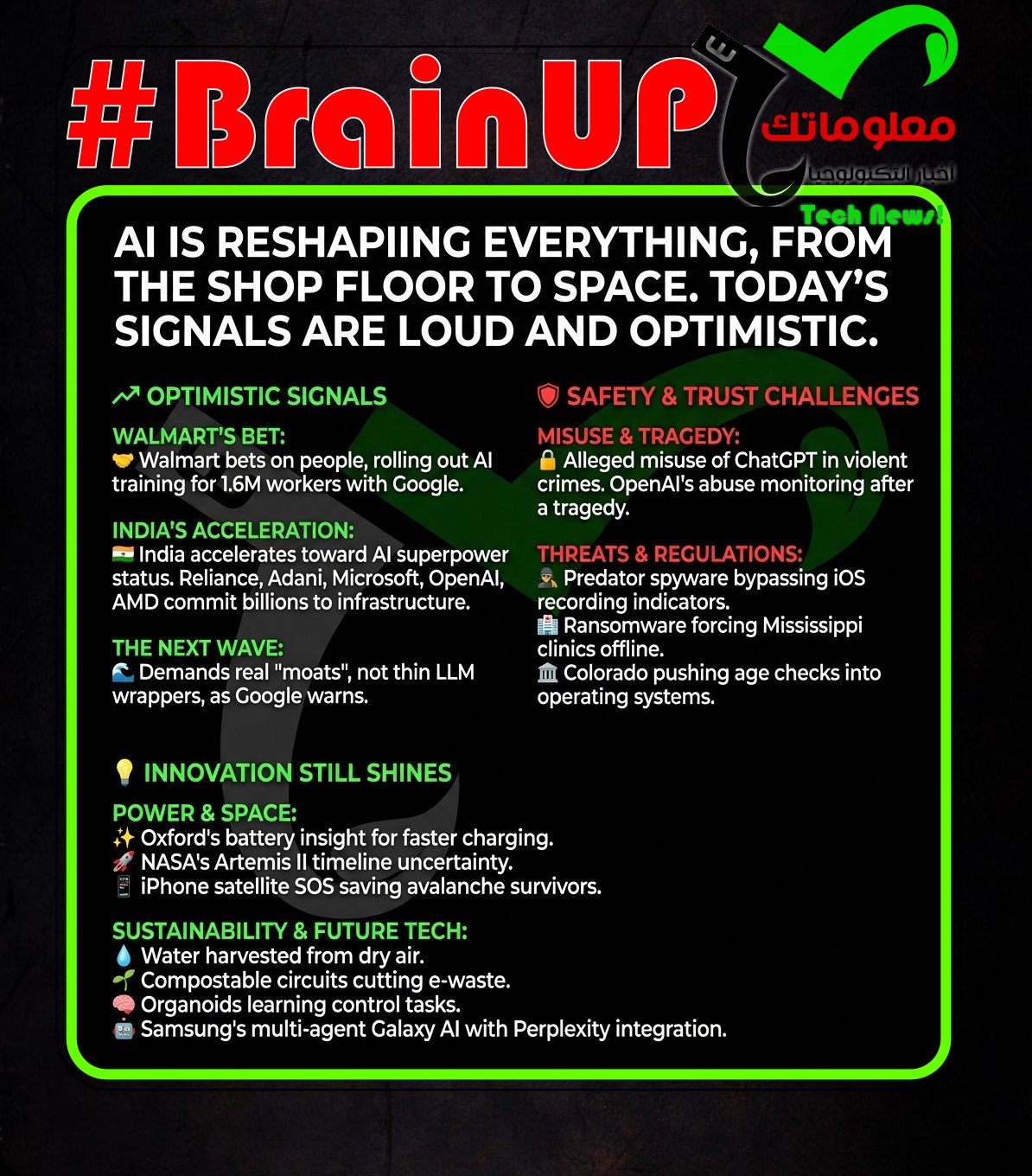

Welcome to today’s curated collection of interesting links and insights for 2026/02/22. Our hand-picked, AI-optimized system has processed and summarized 21 articles from all over the internet to bring you the latest technology news.

As previously aired 🔴 LIVE on Clubhouse, Chatter Social, Instagram, Twitch, X, YouTube, and TikTok.

Also available as a #Podcast on Apple 📻, Spotify 🛜, Anghami, and Amazon 🎧, or anywhere else you listen to podcasts.

Walmart says it is not using #AI as a rationale to cut headcount; instead, it is investing in upskilling its existing workforce through a partnership with #Google. The company will give its 1.6 million U.S. and Canada frontline and corporate employees free access to an eight-hour fundamentals course tied to Google’s new #AI Professional Certification, covering core concepts and applications such as research, app building, and communication, similar to programs offered by employers like @Verizon, Colgate-Palmolive, and Deloitte. The move responds to Google and Ipsos research showing a skills gap: 40% of U.S. workers report using AI at work, but only 5% are “AI fluent,” and AI-fluent workers were 4.5 times as likely to have received higher wages. Walmart chief people officer @Donna Morris called it “unfortunate” when companies use AI to replace workers, framing the training as both a responsibility and a retention strategy that can help associates move into higher-paying leadership or corporate roles. Walmart leaders including former CEO @Doug McMillon and current top leader @John Furner argue AI will change every job but not necessarily eliminate them, with staffing expected to remain roughly stable over the next several years.

2. Woman accused of using ChatGPT to plan drug murders

South Korean police say a 21-year-old woman, identified only as Kim, has been charged with murdering two men after investigators found she repeatedly consulted #ChatGPT about whether mixing sleeping pills with alcohol could be dangerous or fatal. Phone analysis showed she asked questions such as what happens when sedatives are taken with alcohol, how many pills make it dangerous, and whether it could kill someone, while police allege she secretly put prescribed benzodiazepine sedatives into victims’ drinks. Authorities say two men in their 20s died after visiting motels with her on 28 January and 9 February in Seoul’s Gangbuk-gu, and a third man was left unconscious, with police stating she was fully aware the combination could result in death despite her claim she did not know they would die. Investigators also allege an earlier attempt in December, when she drugged a then-partner in a cafe parking lot in Namyangju, after which she purportedly increased the dosage in later incidents. Police say they will continue investigating whether there are additional victims beyond the three identified.

3. Oxford breakthrough could make lithium-ion batteries charge faster and last much longer

University of Oxford researchers developed a patent-pending staining method to make hard-to-detect polymer binders inside #lithium-ion battery anodes visible, enabling better manufacturing control to boost charging performance and lifespan. They tagged cellulose- and latex-based binders in graphite- and silicon-based anodes with traceable silver and bromine markers, then mapped their nanoscale distribution using electron microscopy signals from #energy-dispersive X-ray spectroscopy and #energy-selective backscattered electron imaging. The work shows that even though binders are less than 5% of electrode mass, their spatial distribution strongly affects mechanical strength, electrical and ionic conductivity, and durability across repeated charge cycles. Using insights from these maps, small manufacturing adjustments reduced internal resistance by up to 40%, a change that could support faster charging and longer-lasting batteries. By revealing where binders sit and how they influence conductivity and structural stability, the technique is positioned to improve both current battery production and next-generation electrode designs.

4. Tech giants commit billions to Indian AI as New Delhi pushes for superpower status

At the India AI Impact Summit in New Delhi, major tech firms and investors announced large commitments to expand India’s #AI infrastructure and partnerships as the country pushes for tech superpower status. Reported plans include @Reliance investing $110 billion in data centers and related infrastructure, @Adani outlining a $100 billion AI data center buildout over the next decade, @Microsoft saying it is on pace to invest $50 billion in AI in the Global South by decade’s end, and @Blackstone participating in a $600 million equity raise for Indian AI infrastructure firm Neysa, while @OpenAI and @AMD announced partnerships with Tata Group. The event also highlighted India’s broader policy push, including approval of $18 billion of chip projects and the signing of the #PaxSilica agreement to secure the global supply chain for silicon-based technologies, alongside the U.S. and India moving toward a trade pact. Attendance from leaders such as @SamAltman, @SundarPichai, @DarioAmodei, and @DemisHassabis underscored global interest, even as controversies emerged around @BillGates withdrawing and a university’s disputed robot dog claim. Despite booming public markets, an analyst said private #venture capital and #private equity funding for Indian AI entrepreneurs remains limited, while @Microsoft’s Brad Smith argued India could still become a place where models are developed, especially in domain-specific areas.

5. Sam Altman would like to remind you that humans use a lot of energy, too | TechCrunch

@Sam Altman addressed concerns about #AI environmental impact at an event hosted by The Indian Express, arguing that claims about #ChatGPT water use are “totally fake” and rooted in outdated practices like evaporative cooling in data centers. He said it is fair to worry about total #energy consumption as AI use grows worldwide, and suggested the world needs to move quickly toward #nuclear, #wind, and #solar power. With no legal requirement for tech companies to disclose energy and water usage, scientists have tried to study the issue independently, and data centers have been linked to rising electricity prices. Altman disputed comparisons like a query equaling 1.5 iPhone battery charges and called much of the debate unfair because it focuses on training costs rather than the energy of answering a question after the model is trained. He argued the relevant comparison is AI inference versus a human answering the same question, noting that “it also takes a lot of energy to train a human” and claiming AI has probably already caught up in energy efficiency by that measure.

6. Google VP warns that two types of AI startups may not survive | TechCrunch

As the generative AI boom matures, @Darren Mowry, who leads Google’s global startup organization across Cloud, DeepMind, and Alphabet, warns that startups built as #LLM wrappers or #AI aggregators may struggle to survive because margins are shrinking and differentiation is thin. He describes these models as having a “check engine light” on, arguing that simply putting a UX on top of Claude, GPT, or Gemini, or “white-labeling” a model, no longer earns market patience without defensible moats. Mowry says startups need “deep, wide moats” that are horizontally differentiated or tightly tailored to a vertical market, pointing to Cursor and Harvey AI as examples of wrappers with deeper value. For aggregators that route queries across multiple models and offer orchestration features such as monitoring, governance, or eval tooling, he advises new founders to avoid the category, saying users increasingly expect built-in intellectual property that reliably chooses the right model for their needs, rather than relying on access or compute constraints. Overall, he argues that what worked in mid-2024, like slapping a UI on a GPT and gaining traction during the ChatGPT store moment, is giving way to a need for sustainable product value and clearer differentiation.

Even as tech leaders helped create a more screen-focused world, several prominent billionaires publicly say they tightly restrict their own children’s access to devices and #social media. @Steve Jobs said in 2010 his kids had never used an iPad and that technology use was limited at home, while @Steve Chen warned that #short-form video can drive shorter attention spans and suggested limiting kids to videos longer than 15 minutes. At the 2024 Aspen Ideas Festival, @Peter Thiel said his two young children get only 1.5 hours of screens per week, and other executives have described similar rules: @Bill Gates delayed smartphones until age 14 and banned phones at dinner, @Evan Spiegel said he limits his child to 1.5 hours weekly, and @Elon Musk said it might have been a mistake not to set social media rules for his children. The article links these practices to broader concerns, citing U.S. teens’ average of 7.5 hours of daily screen use and a 2025 study of nearly 100,000 people associating short-form video use with poorer cognition and declines in mental health. As public backlash grows, with countries like Australia and Malaysia banning under-16 social media use and others considering similar laws, the leaders’ strict home limits underscore rising scrutiny of platforms’ effects on minors and ongoing legal and political pressure on companies like #Meta.

8. OpenAI employees raised alarm about mass shooting suspect months ago: report

@OpenAI said it flagged the @ChatGPT account of Jesse Van Rootselaar in June through abuse detection for the #furtherance of violent activities and considered alerting the Royal Canadian Mounted Police, but decided the case did not meet its threshold for referral. The company said its standard for contacting law enforcement is an imminent and credible risk of serious physical harm, and it did not identify credible or imminent planning, so it banned the account for violating its usage policy. After the school shooting, @OpenAI said employees proactively contacted the RCMP with information about the suspect and her use of ChatGPT and pledged continued support for the investigation. Police said Van Rootselaar, 18, is suspected of killing eight people in a remote part of British Columbia before killing herself, and that she first killed her mother and stepbrother at home before attacking a nearby school. The motive remains unclear, and the attack was described as Canada’s deadliest rampage since 2020.

9. Tumbler Ridge School Shooter’s ChatGPT Account Flagged and Banned by OpenAI

After the school shooting in Tumbler Ridge, British Columbia, authorities and #AI safety teams discovered that the shooter’s ChatGPT account had been flagged for generating violent and harmful content prior to the attack but was not banned until after the incident when OpenAI took action, raising serious questions about how #OpenAI moderation systems detect clear warning signs of violence and prevent misuse. The article explains that police shared information with OpenAI about troubling prompts, leading to a ban that came too late to influence prevention. Observers and digital safety experts argue this case highlights a gap in current automated moderation where harmful ideation may be recognized only retrospectively rather than proactively, stressing that human review and real-time threat escalation protocols are essential for effectively curbing dangerous intent expressed on AI platforms. Officials emphasize the complexity of balancing user privacy with public safety but acknowledge the need for better coordination between law enforcement and AI companies to ensure early detection of truly harmful behavior. The story underscores ongoing debates about #AI responsibility, content moderation efficacy and how tech platforms handle predictive signals of real-world violence.

10. How Predator Spyware Defeats iOS Recording Indicators

Jamf Threat Labs documents how the commercial #Predator spyware (Intellexa/Cytrox) can suppress iOS camera and microphone recording indicators introduced in iOS 14, enabling covert surveillance without the user seeing the green or orange status bar dots. The technique requires a device that is already fully compromised, including kernel-level access to install hooks and inject code into system processes, and the research does not disclose new iOS vulnerabilities or exploitation methods. Reverse engineering of Predator iOS samples describes previously undocumented mechanisms such as using Objective-C nil messaging to suppress sensor activity updates, a single hook that disables both camera and microphone indicators, targeted iOS private framework APIs, and an operational gap where VoIP recording lacks built-in stealth. The approach differs from the earlier #NoReboot technique described by @ZecOps by keeping the device functioning normally while selectively removing only the visual warnings managed by SpringBoard. This analysis is intended to help defenders build detection capabilities by understanding Predator’s post-compromise behavior and its HiddenDot indicator suppression component.

11. Colorado moves age checks from websites to operating systems | Biometric Update

Colorado Senate Bill 26-051 proposes shifting #ageVerification enforcement away from individual websites and toward mobile operating systems and app store ecosystems to limit minors’ access to harmful content. The bill would require operating system providers to collect date of birth or age information at account creation, generate an age bracket signal, and expose that signal to developers via an API when apps are downloaded or accessed through covered app stores, with app developers required to request and use the signal. This approach is framed as a response to earlier Colorado efforts that struggled with child safety, privacy, feasibility, and constitutional limits, including SB 25-201, which targeted certain websites but raised #FirstAmendment and security concerns, and SB 25-086, which was vetoed by Governor @Jared Polis over feasibility and constitutional exposure. By embedding age attestation at the OS account layer, the proposal aims to avoid mandating that every website perform its own checks, while still creating a standardized mechanism for age gating through centralized platforms. The move reflects Colorado’s ongoing attempt to balance protecting children online with privacy and enforceability constraints by relocating responsibility to the infrastructure that mediates app access.
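
To make the mechanism described above more concrete, here is a minimal Python sketch of an OS-level account that stores a date of birth and exposes only a coarse age bracket to apps. Everything in it is an assumption for illustration: the bracket cut-offs, the `OsAccount` class, and the `app_may_show_mature_content` check are hypothetical and are not taken from the bill or from any real operating system API.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class AgeBracket(Enum):
    # Hypothetical cut-offs for illustration; the summary does not give the bill's brackets.
    UNDER_13 = "under_13"
    AGE_13_TO_15 = "13_to_15"
    AGE_16_TO_17 = "16_to_17"
    ADULT = "18_plus"


def age_in_years(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    birthday_passed = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if birthday_passed else 1)


def bracket_for(birth_date: date, today: date) -> AgeBracket:
    """Map a date of birth to a coarse age bracket."""
    age = age_in_years(birth_date, today)
    if age < 13:
        return AgeBracket.UNDER_13
    if age < 16:
        return AgeBracket.AGE_13_TO_15
    if age < 18:
        return AgeBracket.AGE_16_TO_17
    return AgeBracket.ADULT


@dataclass
class OsAccount:
    """Stand-in for an OS-level account that keeps the date of birth private."""
    _birth_date: date

    def age_signal(self, today: date) -> AgeBracket:
        # Apps receive only the coarse bracket, never the date of birth itself.
        return bracket_for(self._birth_date, today)


def app_may_show_mature_content(account: OsAccount, today: date) -> bool:
    """Hypothetical developer-side check that requests and uses the signal."""
    return account.age_signal(today) is AgeBracket.ADULT
```

The design point the sketch tries to capture is that the date of birth stays at the account layer, while each app sees only the bracket it needs for age gating.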

12. Mississippi health system shuts down clinics statewide after ransomware attack

The University of Mississippi Medical Center closed all 35 of its clinics statewide after a #ransomware attack disrupted patient care by affecting its phone and electronic systems. Officials said the attack began Thursday and compromised key systems including the Epic #electronic health records platform and the medical center’s IT network, prompting UMMC to take all systems offline until they can be tested and confirmed safe. UMMC hospitals and emergency departments remained operational, but clinic services were halted and appointments including chemotherapy and elective procedures were canceled as of Friday, with staff using paper documentation to continue ongoing care. UMMC vice chancellor @LouAnn Woodward said the scope of the intrusion and whether patient information was compromised were still unclear, and that UMMC was working with law enforcement including the @FBI and cybersecurity specialists. @Robert Eikhoff said the FBI is surging resources to help restore systems and patient care as the investigation continues.

13. iPhone’s Emergency SOS via Satellite Feature Helped Rescue Skiers Caught in Lake Tahoe Avalanche

Six skiers who survived an avalanche near Lake Tahoe used #Emergency SOS via Satellite on an iPhone to contact rescuers when they lacked cellular or Wi-Fi service, according to The New York Times. The skiers maintained text communication with the Nevada County Sheriff’s Office for several hours while rescue efforts were coordinated, with Don O’Keefe of California’s Office of Emergency Services describing a four-hour exchange between personnel and a guide. The incident highlights how #satellite-based texting can keep people connected during off-grid emergencies and support real-time coordination with law enforcement. The feature is available on iPhone 14 and later, and on the Apple Watch Ultra 3, and Apple provides it for free. The article notes the feature has aided other emergencies like car accidents and wildfires, and it works across numerous countries including the U.S. and U.K.

14. Nascent tech, real fear: how AI anxiety is upending career ambitions

Fear that #AI and #generativeAI will erode entry-level white-collar work is already changing what people study and the careers they pursue, even before large-scale automation fully arrives. Computer science student Matthew Ramirez switched from programming to nursing after tech layoff headlines and an unanswered datacenter technician interview reinforced his belief that coding will be easier to automate than healthcare. The article cites the @World Economic Forum projection that AI could displace 92m roles worldwide by 2030, and notes that US employers listed AI in nearly 55,000 job cuts in 2025, while ADP data showed losses in professional and business services and information services in December 2025 alongside growth in healthcare, education, and hospitality. Because many affected white-collar jobs involve writing, data analysis, and coding, workers are increasingly drawn to people-facing and hands-on roles, with Zety’s Dr Jasmine Escalera pointing to research that 43% of AI-anxious #GenZ are moving away from entry-level corporate and administrative jobs and 53% are considering blue-collar or skilled trades. The result is a labor market being reshaped by anticipation and uncertainty about AI’s capabilities, with some workers avoiding exposure and others adjusting their paths to emphasize “human skills” that appear harder to automate.

15. Theft of Trade Secrets Is on the Rise and AI Is Making It Worse

Corporate insiders and cybersecurity investigators report a spike in the theft of trade secrets that is being exacerbated by #AI tools, which make it easier to extract, organize and transfer proprietary information with minimal technical skill. Employees or bad actors can use AI to search vast internal archives for sensitive formulas, plans and designs while evading detection through obfuscation techniques. Companies are increasingly worried that automated tools can reconstruct product blueprints or algorithmic assets from fragmented data stolen over time, and that AI accelerates the speed and scale of exfiltration, making traditional security controls less effective. Legal experts say firms are responding by tightening access controls, deploying AI-based monitoring to detect anomalous data behavior and pursuing civil litigation after leaks surface, yet they acknowledge the challenge of keeping pace with generative capabilities that can reassemble complex intellectual property. The trend reflects how emerging technology introduces new attack surfaces for corporate espionage, pushing boards and CISOs to rethink governance, employee monitoring and ethical AI use policies to protect innovation and competitive advantage.

16. FCC chair wants the Pledge of Allegiance and national anthem on the airwaves every day

@Brendan Carr, the @FCC chair appointed by President Trump, announced the #Pledge America Campaign urging broadcasters to air “patriotic, pro-America programming” tied to America’s 250th birthday, including a suggestion to start each broadcast day with the #PledgeOfAllegiance or the national anthem, “The Star-Spangled Banner.” He also encouraged stations to run PSAs, civic education segments, “Today in American History” announcements, features on local and national historic sites like #NationalParkService locations, and music by composers such as John Philip Sousa, Aaron Copland, Duke Ellington, and George Gershwin. Carr framed this as a voluntary effort aligned with broadcasters’ public interest mandate, and the announcement emphasized that participation is not required but stations may choose to signal their commitment. The article notes that similar patriotic sign-offs once occurred on U.S. networks but faded with 24-hour broadcasting, and it situates the campaign amid Carr’s recent clashes with network programming, including calls involving @JimmyKimmelLive and FCC guidance affecting political equal-time expectations for talk shows. Overall, the campaign seeks to steer daily broadcast content toward a unified, civics-focused celebration as the 250th anniversary approaches.

17. AI Money and the Midterms Spotlight OpenAI and Anthropic Influence

In the lead-up to the 2026 U.S. midterm elections, political campaigns and advocacy groups are investing heavily in #GenerativeAI tools from @OpenAI and @Anthropic to craft messaging, target voters and analyze trends. This is prompting scrutiny from election law experts, who warn that the scale of AI-driven campaign spending and influence operations could reshape traditional political communication and pressure regulators to clarify how AI contributions and expenditures must be reported under campaign finance rules. The article describes how parties and outside groups use AI to produce personalized outreach at unprecedented speed, generate rapid-response content and optimize advertising strategies, intensifying competition for voter attention while raising concerns about misinformation amplification, lack of transparency and the potential to exploit voter profiles without clear disclosure. Lawmakers and ethics advocates argue that existing political advertising rules were not designed for AI-generated creative and automated deployment, leading to calls for updated guidelines that ensure accountability and prevent covert manipulation. The phenomenon highlights emerging intersections between technology, democracy and governance as generative systems become central to political strategy and public discourse.

18. Across the US, People Are Dismantling and Destroying Flock Surveillance Cameras

Across multiple states, citizens opposed to warrantless vehicle surveillance have been intentionally smashing and dismantling #Flock automatic license plate reader cameras, particularly in cities like La Mesa, Eugene, Greenview and Lisbon, driven by anger that the devices collect extensive identifying data without warrants and share it with law enforcement agencies including ICE in ways critics call Orwellian and unconstitutional under the Fourth Amendment. The backlash follows public protests against municipal contracts with Flock, with some communities such as Santa Cruz and Eugene successfully cancelling agreements, while activists in other regions have taken matters into their own hands by physically removing camera poles and destroying hardware to stop the collection of movement data that can be used to track individuals and vehicles without judicial oversight. In Virginia, a man admitted to cutting down a series of Flock cameras and faced charges, then turned to a GoFundMe campaign and online support that frames his actions as defending privacy rights, reflecting widespread grassroots frustration with pervasive surveillance technologies. The rising resistance includes local news reports, viral social media support and campaigns like DeFlock that map rejections of ALPR contracts, illustrating a broader civil pushback against surveillance and data sharing that many see as encroaching on civil liberties and privacy protections.

19. NASA’s Artemis II lunar mission may not launch in March after all

#NASA says the #ArtemisII lunar mission may miss all March launch opportunities because of a rocket systems issue discovered at the pad. Technicians observed an “interrupted flow” of helium to the rocket system, prompting the agency to consider rolling the rocket and #Orion spacecraft back to the Vehicle Assembly Building for additional tests, which would eliminate five potential March dates and shift attempts to six windows in April. NASA says it is actively reviewing data and does not yet know why the helium flow was interrupted, and it is also looking back at the uncrewed Artemis I mission in 2022 when teams had to troubleshoot helium-related upper stage pressurization before launch. The potential delay comes a day after managers were optimistic following a second wet dress rehearsal, even as earlier fueling tests had revealed issues such as a liquid hydrogen leak and a separate dress rehearsal saw a ground communications loss that required backup systems. If the rollback occurs, the schedule for the 10-day crewed mission intended to return humans to the moon’s vicinity for the first time since Apollo 17 in 1972 would likely move from March to April.

20. India’s AI Data Centre Boom Raises Energy and Water Challenges

India’s rapid expansion of #AI-ready data centres — driven by government support and billions in private investment — is creating a stark tension between economic ambitions and environmental pressures in a country already facing water scarcity and rising electricity demand, with experts warning that the thirst for power and cooling resources could worsen local shortages and complicate climate commitments. As installed data centre capacity is projected to climb sharply by 2030, both electricity usage and water consumption for cooling are expected to more than double, requiring careful planning to manage grid loads and local water resources amid India’s net-zero by 2070 goals. India’s urban water stress is significant — the nation holds roughly 18 percent of the world’s population but only about 4 percent of its freshwater — and large facilities that rely on evaporative cooling systems can consume huge volumes of water daily, raising concerns in regions like Mumbai, Chennai and Hyderabad where infrastructure is already strained; experts argue that without efficient cooling technologies, recycled water use and transparent reporting on environmental footprints, the growth of AI infrastructure could deepen competition over finite water supplies. The energy-water nexus is now a central theme at forums like the India AI Impact Summit, where policymakers and technologists emphasise that balancing digital innovation with sustainability will require integrated planning, renewable integration, and regulatory frameworks to ensure that AI-driven data centre growth does not undermine community access to vital resources.

21. Fake Faces Generated by AI Are Now Nearly as Good as True Faces, Researchers Find

Researchers have found that #AI generated faces are reaching a level of realism where they are nearly indistinguishable from real human faces in both visual quality and statistical detection tests, meaning synthetic face images from advanced generative models can fool state-of-the-art detectors and challenge forensic tools designed to spot manipulations. The study used a variety of modern generative systems to produce face portraits and then tested detection algorithms against these outputs, revealing that improvements in training methods and model architectures have significantly closed the gap between synthetic and real images, with some fake faces scoring higher on realism metrics than older real face datasets. This raises concerns among security and privacy experts because indistinguishable deepfake faces could be used to create highly convincing fake identities for social engineering, evading biometric systems, or powering deceptive profiles on #SocialMedia platforms without easy ways to verify authenticity. Researchers caution that conventional detection strategies must evolve alongside generative capabilities, with some suggesting hybrid approaches that combine model provenance signals, temporal consistency checks and hardware fingerprinting to reliably flag synthetic identities. The trend underscores how generative AI advancements, while enabling creative and expressive applications, also intensify risks around misinformation, identity fraud and trust in digital interactions where visual authenticity was once taken for granted.

22. ‘Reimagining matter’: Nobel laureate invents machine that harvests water from dry air

@Omar Yaghi, a 2025 Nobel prize-winning chemist, has developed an environmentally friendly #atmospheric water-harvesting machine intended to provide clean water when central supplies fail during hurricanes or drought. Using #reticular chemistry to create molecularly engineered materials, the container-sized units can extract moisture from air even in arid conditions and, powered entirely by ultra-low-grade ambient thermal energy, generate up to 1,000 litres of clean water daily. Yaghi and his company #Atoco argue the technology could improve water resilience for vulnerable Caribbean islands and help reach marooned communities after storms such as hurricanes Beryl and Melissa. He also positions it as a climate-friendly alternative to #desalination, which can threaten ecosystems when concentrated brine is discharged into the ocean. With a UN warning of a “global water bankruptcy era” and billions lacking safe water or facing periodic scarcity, officials in places like Carriacou and Petite Martinique say off-grid operation using ambient energy is particularly compelling as they cope with storm damage, longer dry seasons, and reliance on imported water.

23. Tesla U.S. Sales Fall for Fourth Straight Month in January

@Tesla’s hold on the U.S. electric vehicle market is weakening: new registration estimates from Motor Intelligence show the company sold about 40,100 vehicles in January 2026, down roughly 17 percent year over year and marking its fourth consecutive monthly decline. Full-year 2025 registrations are estimated at 568,000 units, about 10 percent lower than 2024. The downturn coincides with the expiration of the $7,500 federal EV tax credit in late 2025, effectively raising prices across the #EV segment and contributing to a broader slowdown in demand, with overall January EV sales estimated to be nearly 30 percent lower than a year earlier and a Deloitte survey suggesting only 7 percent of U.S. buyers plan to choose an EV next. Although the Model Y remains a standout, continuing as California’s best-selling vehicle and outperforming models like the Toyota RAV4, Tesla’s statewide sales still slipped from 202,865 units in 2024 to 179,656 in 2025, signaling erosion even in strongholds. Competitive pressure is intensifying globally as #BYD surpasses Tesla in worldwide EV sales, while Tesla has discontinued the Model S and Model X amid weaker demand, reinforcing concerns that January’s decline reflects structural challenges rather than a temporary dip. Combined with pricing pressures, cooling consumer appetite and intensifying competition, the figures suggest that Tesla’s once-dominant position in the U.S. EV market is facing sustained headwinds rather than a brief correction.

24. Scientists Grew Mini Brains, Then Trained Them to Solve an Engineering Problem

Lab-grown cortical organoids provided a proof of concept that living neural circuits can be adaptively tuned with structured, closed-loop electrical feedback to improve performance on an unstable control task. In a virtual #cartpole setup, patterns of stimulation encoded the direction and degree of the pole’s tilt, and the organoids’ electrical responses were decoded as left or right forces moving the cart to keep the pole upright, with performance measured by how long each episode lasted before failure. Mouse stem-cell-derived organoids were tested under three conditions: no feedback, random feedback to selected neurons, or adaptive feedback based on past performance, with the adaptive condition emphasized as key. The work does not suggest a hybrid biocomputer or organoid sentience, but it indicates that neural tissue in a dish can change its internal connections in response to feedback in a way that supports better control. @Ash Robbins and colleagues argue this approach could help study the fundamentals of neuronal tuning and provide a tool for probing how neurological disease affects learning-related plasticity.
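
For readers unfamiliar with the cart-pole task, the closed loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the physics constants are the standard textbook cart-pole values rather than the study's settings, and `untuned_response` and `tuned_response` are hypothetical software stand-ins for the organoid's decoded activity, not a model of real tissue or of the authors' decoder.

```python
import math

# Standard textbook cart-pole constants (assumed; the study's simulator settings are not in the summary).
GRAVITY, CART_MASS, POLE_MASS = 9.8, 1.0, 0.1
POLE_HALF_LENGTH, PUSH_FORCE, DT = 0.5, 10.0, 0.02
ANGLE_LIMIT = math.radians(12)  # the episode fails past this tilt
TRACK_LIMIT = 2.4               # or when the cart leaves the track


def step(state, push_right):
    """Advance the cart-pole equations of motion by one Euler step."""
    x, x_dot, theta, theta_dot = state
    force = PUSH_FORCE if push_right else -PUSH_FORCE
    total_mass = CART_MASS + POLE_MASS
    pole_ml = POLE_MASS * POLE_HALF_LENGTH
    sin_t, cos_t = math.sin(theta), math.cos(theta)
    temp = (force + pole_ml * theta_dot ** 2 * sin_t) / total_mass
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        POLE_HALF_LENGTH * (4.0 / 3.0 - POLE_MASS * cos_t ** 2 / total_mass)
    )
    x_acc = temp - pole_ml * theta_acc * cos_t / total_mass
    return (x + DT * x_dot, x_dot + DT * x_acc,
            theta + DT * theta_dot, theta_dot + DT * theta_acc)


def encode_tilt(theta):
    """Stimulation pattern: which way the pole leans and how strongly (0 to 1)."""
    direction = "right" if theta > 0 else "left"
    intensity = min(1.0, abs(theta) / ANGLE_LIMIT)
    return direction, intensity


def untuned_response(stimulus):
    """Stand-in for activity that ignores the stimulus: always decoded as 'push right'."""
    return True


def tuned_response(stimulus):
    """Stand-in for tuned activity: the decoded push follows the lean direction."""
    direction, _intensity = stimulus
    return direction == "right"


def run_episode(respond, max_steps=500):
    """Return how many steps the pole stays up, the performance measure in the summary."""
    state = (0.0, 0.0, 0.02, 0.0)  # cart centered, pole leaning slightly right
    for steps in range(max_steps):
        x, _, theta, _ = state
        if abs(theta) > ANGLE_LIMIT or abs(x) > TRACK_LIMIT:
            return steps
        state = step(state, respond(encode_tilt(theta)))
    return max_steps
```

Calling `run_episode(tuned_response)` and `run_episode(untuned_response)` compares the two stand-ins by episode length. In the experiment, the interesting part is that the mapping from stimulus to response lives in living tissue and shifts with feedback, which this sketch does not attempt to model.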

Researchers at the University of Glasgow have developed almost entirely biodegradable #printed circuit boards that could reduce the environmental harms of #e-waste. Using a “growth and transfer additive manufacturing process,” they print zinc-based conductive tracks, as narrow as five microns, onto biodegradable substrates such as paper and bioplastics, enabling 99% of the materials to be safely disposed of through soil composting or by dissolving them in common chemicals like vinegar. The circuits performed comparably to conventional copper-based boards and were successfully tested in devices including tactile sensors, LED counters, and temperature sensors, with stability maintained after more than a year in ambient conditions. A #life cycle assessment found the biodegradable PCBs could cut global warming potential by 79% and resource depletion by 90% versus conventional boards, supporting a move toward #circular electronics. @Dr Jonathon Harwell said the approach could have far-reaching impacts for consumer electronics, #internet-of-things devices, and disposable sensors.

26. The Pixel 10a will finally end the Tensor debate

The Pixel 10a’s use of the #Tensor G4 is framed as Google’s chance to quiet years of criticism about Tensor performance and overheating, while keeping costs in line instead of using a newer chip. The author argues much of the backlash stems from early #Tensor growing pains on Pixel 6 and 7, but says modern Pixels have improved, citing no undue overheating since #Tensor G2, strong battery life and thermals reported on Pixel 8 Pro with #Tensor G3, and the author’s own positive experience with Pixel 9a battery life. Because the G4 is a known quantity, Google has had a year to refine #software optimization, core scheduling, and thermals, which should make the Pixel 10a smooth, efficient, and potentially the best-launching G4 device, with improved image processing as well. With Google promising 7 years of support, the Pixel 10a is positioned to demonstrate that Tensor is designed for longevity, not benchmark dominance, and can sustain Android upgrades and new #Gemini features over time. If performance is solid from launch and remains reliable through updates, it would support the claim that Google can keep older Tensor hardware feeling good with software and long-term support.

27. Galaxy AI turns into a multi-agent ecosystem, adds deep integration with Perplexity AI

@Samsung is evolving #GalaxyAI into a #multi-agent ecosystem with OS-level integration so users can work with multiple AI agents seamlessly, without switching apps or repeating commands. The company cites internal research showing nearly 8 in 10 users regularly use more than two AI agents, and says system-level integration also provides more context for more natural interactions. The first newly integrated agent is @Perplexity, summonable via “Hey Plex” or by assigning the side button, and it will appear on upcoming flagship Galaxy devices. Plex will be deeply embedded in Samsung first-party apps like Gallery, Notes, Calendar, Clock, and Reminder, and will also work with certain third-party apps to handle multi-step workflows; more device and experience details are promised soon. Samsung frames Galaxy AI as an “orchestrator” for an open, integrated AI ecosystem, and notes it recently unveiled an upgraded #Bixby in #OneUI8.5 with natural-language system setting changes and real-time web searches.

28. Amazon Overtakes Walmart as World’s Largest Company by Sales

Amazon has officially overtaken @Walmart to become the world’s largest company by annual sales, posting about $717 billion in revenue for 2025 compared with Walmart’s roughly $713 billion. The result ends a 13-year streak in which the Arkansas-based retailer led global sales and reflects Amazon’s evolution from online marketplace into a diversified technology and commerce powerhouse, driven by strong performance not just in retail but also in cloud computing via #AWS, advertising, and subscription services. The milestone illustrates how digital transformation and the growth of #Ecommerce and cloud infrastructure have reshaped traditional retail hierarchies, even as Walmart’s physical stores and expanding online efforts continue to register solid growth.

29. Court Having Trouble Assembling Jury for Elon Musk Because People Hate Him So Much

In a San Francisco federal court case accusing @Elon Musk of manipulating Twitter’s stock, jury selection has been difficult because many prospective jurors say they strongly dislike him. Judge Charles R. Breyer questioned more than 90 potential jurors about whether they could judge solely on the evidence and law, and over a third said they could not be impartial and were dismissed, while others admitted negative views but claimed they could set them aside. Musk’s attorney Stephen Broome argued it was improper to seat jurors who said things like they hated Musk or thought he lacked a moral compass, but Breyer responded that public figures naturally “excite” strong opinions and the key issue is whether jurors can put those aside. The dispute over impartiality sits alongside allegations that Musk made misleading statements during his $44 billion bid for Twitter, later renamed X, including tweets suggesting the deal was “on hold” over #spam and #bot account estimates, after which Twitter’s stock fell nine percent. The court must decide whether jurors can separate their views about Musk, including reactions to his ties to the @Trump administration and #DOGE, from the evidence about his conduct in the Twitter deal.

30. Reported DJI RoboVac Bug Exposed Thousands of Live Home Feeds

A security flaw in the #DJI RoboVac home cleaning robot allowed unauthorized individuals to access live camera feeds from thousands of devices, exposing private interior views without owner consent. The case illustrates how network-connected smart appliances with integrated #AI navigation and remote video capabilities can present significant privacy risks when backend authentication or encryption protections are weak. Users discovered random feeds appearing in their app interfaces, leading to public reporting and swift warnings from security researchers, who stress that internet-facing IoT products must enforce strict session validation and access controls to prevent cross-device exposure. DJI responded by temporarily disabling certain remote access features and rolling out firmware updates aimed at patching the vulnerability, while advising owners to update devices immediately and reset networks to mitigate ongoing risk. The incident underscores broader concerns about the security posture of consumer robotics and smart home ecosystems, where convenience and connectivity often outpace robust privacy safeguards, prompting calls for industry standards that enforce secure design principles and protect users from live surveillance exposures.

That’s all for today’s digest for 2026/02/22! We picked and processed 21 articles. Stay tuned for tomorrow’s collection of insights and discoveries.

Thanks, Patricia Zougheib and Dr Badawi, for curating the links.

See you in the next one! 🚀