#BrainUp Daily Tech News – (Friday, February 20ᵗʰ)

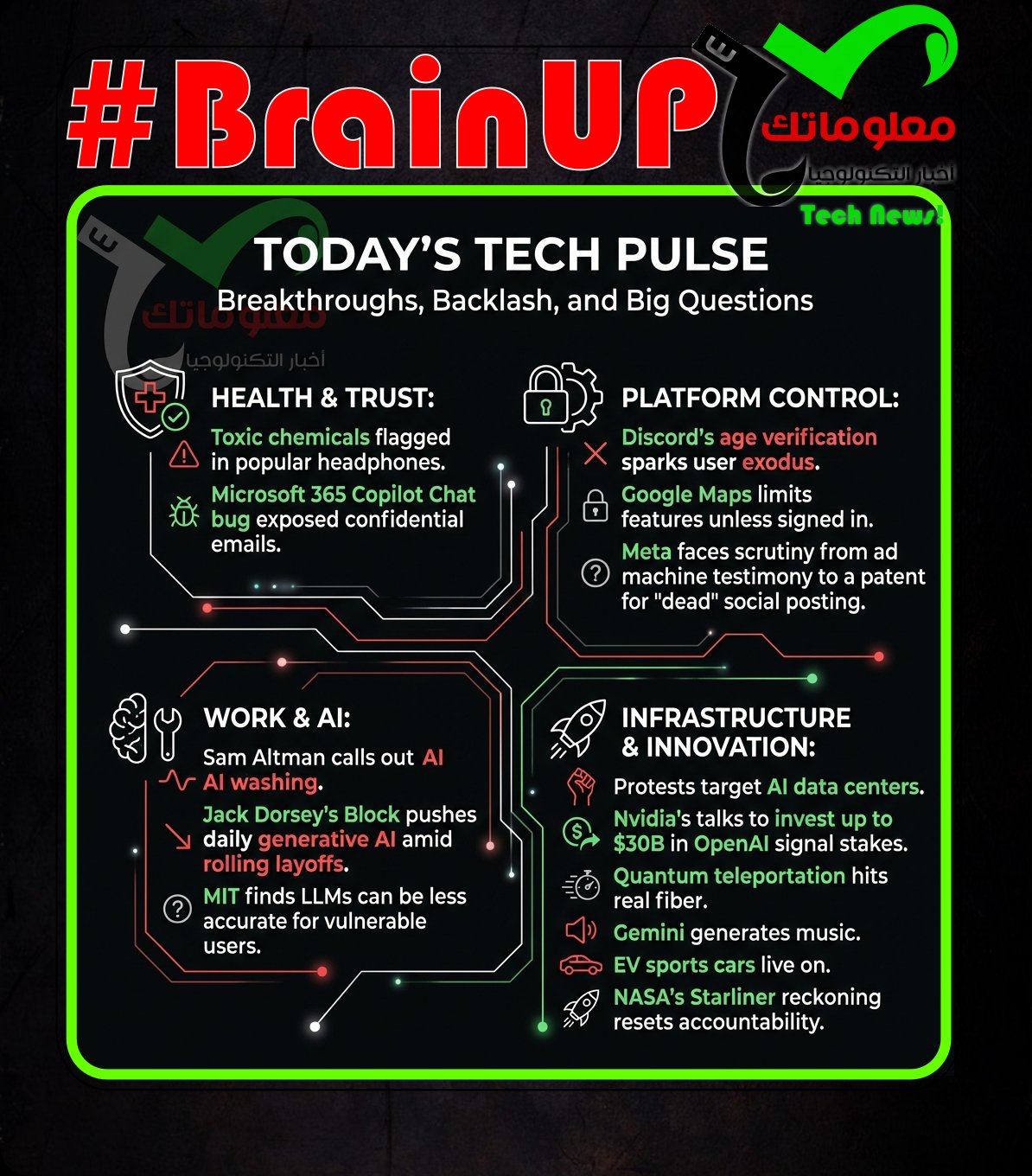

Welcome to today’s curated collection of interesting links and insights for 2026/02/20. Our hand-picked, AI-optimized system has processed and summarized 27 articles from all over the internet to bring you the latest technology news.

As previously aired 🔴 LIVE on Clubhouse, Chatter Social, Instagram, Twitch, X, YouTube, and TikTok.

Also available as a #Podcast on Apple 📻, Spotify 🛜, Anghami, and Amazon 🎧, or anywhere else you listen to podcasts.

1. Harmful Chemicals Found in Dozens of Popular Headphones

A study revealed that multiple popular headphone brands contain harmful chemicals like #phthalates and #lead, which pose health risks with prolonged exposure. Testing of 50 models showed that 24% exceeded safety limits for certain substances, raising concerns about consumer safety. Manufacturers often fail to disclose chemical content, increasing potential health hazards for users. Regulatory agencies recommend stricter testing and transparent labeling to prevent health issues. Consumers are advised to research product materials before purchase to minimize exposure to toxic chemicals.

2. Discord is in trouble, and we asked our readers if it’s time to jump ship

Discord is facing major community backlash after announcing global #ageVerification and a “Teen-by-Default” stance, prompting many users to consider leaving the platform. After a UK and Australia test, users on Reddit and X reported canceling Nitro, deleting accounts, and seeking alternatives, while Discord clarified that not everyone will need a government ID or facial scan because its background monitoring can determine age “with high confidence.” The clarification post received a Community Note highlighting Discord’s use of Persona as an age verification vendor, citing ties to @Peter Thiel and alleging Persona retains data despite Discord’s claims. In a Windows Central poll with 200+ responses, 59% said they would switch to an alternative and 27% said maybe, while only 15% planned to stay; related coverage noted searches for “Discord alternatives” spiked 10,000% overnight and interest in Stoat rose 9,900%. Follow-up polling showed Stoat as the leading alternative (48%), with Matrix (15%) and TeamSpeak (14%), alongside reports that TeamSpeak is seeing an “incredible surge of new users,” reinforcing that Discord’s policy shift is actively driving migration discussions and platform switching.

In Germany, @Asus and @Acer have effectively made official support resources inaccessible, leaving customers unable to reach downloads and support pages for items like BIOS updates and drivers. Computerbase.de reports that both companies’ German sites are down, and German users are also blocked when trying to use the U.S. versions of the sites, with VPN attempts to reach the German sites failing unless users connect from outside Germany and use non-German websites. The disruption stems from a @Nokia lawsuit over #HEVC codec royalties that led to a German court injunction restricting the companies from offering or importing affected devices in Germany. @Asus publicly says after-sales services in Germany remain operational and support is uninterrupted, but the total site unreachability contradicts that claim and obscures even basic contact options. The broad geo-block appears to be an overcautious or unintended consequence of complying with the injunction, going beyond simply disabling German online stores or shipments.

4. Meta Cuts Staff Stock Awards for a Second Straight Year

Meta is reducing the size of its annual stock awards for most employees by about 5 percent as part of cost-cutting measures while it prioritises spending on #AI development and data centre expansion. This is the second consecutive year that staff equity compensation has shrunk, following a larger cut last year amid a broader reallocation of resources toward future-oriented investments. The step reflects @Mark Zuckerberg’s strategy to manage Meta’s rising capital demands for artificial intelligence infrastructure and growth while balancing employee compensation. It comes as the company navigates competitive pressures in the tech sector and seeks to maintain momentum on AI-linked products and services. Overall, the reduction in equity awards signals internal shifts in how Meta allocates rewards against long-term technology priorities and market expectations for growth in the AI domain.

@Sam Altman says some companies are engaging in #AI washing by blaming layoffs on #AI even when the cuts would have happened anyway, while also acknowledging some real job displacement. He pointed to a mixed picture in the data: an #NBER study of C-suite executives across several countries found nearly 90% reported no AI impact on employment over the past three years after #ChatGPT’s release, even as leaders like @Dario Amodei warn of large white-collar losses and executives such as @Sebastian Siemiatkowski forecast significant staff reductions partly tied to AI. Altman still expects AI’s labor effects to become more noticeable in the next few years, alongside new jobs that complement the technology. Evidence cited from the Yale Budget Lab using #BLS survey data found no significant changes so far in occupation mix or unemployment duration for AI-exposed jobs through November 2025, and its director @Martha Gimbel suggested firms may be using AI as cover for margin pressure, weak demand, and geopolitical headwinds. Commentators add that pressure to justify heavy AI investment can amplify disruption narratives, with @Torsten Slok comparing today’s macro data gap to the 1980s IT era when @Robert Solow noted limited productivity gains despite big predictions.

6. Inside the Rolling Layoffs at Jack Dorsey’s Block

After early February layoffs at @Jack Dorsey’s Block, employees describe a deteriorating culture marked by low morale, heightened performance anxiety, and a mandate to use #generativeAI daily. WIRED reports the cuts are being rolled out over weeks and may reach about 10 percent of the workforce, leaving remaining staff uncertain about their jobs and struggling to plan their lives. Workers also dispute leadership’s framing of the layoffs as merit-based, criticizing an email from engineering lead @Arnaud Weber and Dorsey’s all-hands comments asserting some employees were “phoning it in” rather than the layoffs being cost-driven. Block now expects employees to email weekly updates to Dorsey, who uses #AI to summarize the volume, while tensions grow over pushing #LLM adoption to accelerate delivery versus maintaining code quality and engineering rigor. The accounts from current and former staff suggest the rolling process and AI productivity push are amplifying fear and eroding trust inside the company behind Square and Cash App.

7. Study: AI chatbots provide less-accurate information to vulnerable users

Researchers at MIT’s Center for Constructive Communication report that leading #LLMs can underperform for vulnerable users, giving less accurate and less truthful answers and refusing to respond more often. Testing OpenAI’s GPT-4, Anthropic’s Claude 3 Opus, and Meta’s Llama 3 on TruthfulQA and SciQ with prepended user biographies, the team found significant accuracy drops for users described as non-native English speakers and as having less formal education, with the largest declines at the intersection of both traits. Country of origin also mattered: when comparing users from the United States, Iran, and China with equivalent educational backgrounds, Claude 3 Opus performed significantly worse for users from Iran on both datasets. The findings suggest model behavior can compound harms across language proficiency, education, and nationality, sometimes including condescending or patronizing language, undermining the goal of using chatbots to democratize information access. Lead author @Elinor Poole-Dayan and colleagues argue that realizing the promise of these systems requires mitigating biases and harmful tendencies so performance is reliable across demographics.

8. If Chatbots Can Replace Writers, It’s Because We Made Writing Replaceable | The Walrus

The article links the rise of #generativeAI writing tools to a broader history of work becoming automated and culturally devalued, suggesting writers may be headed toward the same displacement seen in former industrial centers. The author recounts a night in Sheffield’s transformed Neepsend neighborhood, where a former steelworks is now a trendy bar, and a German publisher who trained as an academic philosopher describes making money by producing AI-generated motivational e-books, a shift from “phenomenology” to inspirational quotes. Against the backdrop of derelict factories along the River Don and @George Orwell’s earlier depiction of Sheffield’s harsh labor conditions, the author reflects on an eleven-year writing career across journalism, #SEO work, literary criticism, editing, and ghostwriting, and imagines writing reduced to a quaint demonstration craft. The arrival of #ChatGPT and the sweeping claims made about it, from transforming major sectors to acting like an “imminent god,” intensify this anxiety, while common criticisms are noted: inaccuracy, plagiarism and intellectual property violations, embedded bias, environmental cost, and job loss. Framed by the city’s post-industrial landscape, the piece implies that if chatbots can replace writers, it is partly because publishing already rewards standardized, copy-like prose that makes writing easier to substitute.

9. Who Actually Profits From AI? We Followed the $650 Billion

AI is transforming industries with huge economic implications, but most financial gains flow not to end users or everyday consumers but to the layers of the AI value chain that control infrastructure, chips, cloud services and deployment platforms. Companies that provide the critical hardware, hosting and operational systems, such as #NVIDIA GPUs, hyperscaler cloud providers and middleware services, capture the bulk of revenue. Many AI labs, by contrast, operate with tight margins or ongoing losses: model development and training costs remain extraordinarily high, so profits are thin or negative even when inference services generate gross margins, according to research into how AI economics actually play out. Experts point out that while applications and interfaces bring visibility, the real monetization happens in cloud hosting, compute capacity rental, semiconductor manufacturing and data center services that are indispensable to power and scale generative systems but rarely visible to users. Users themselves contribute valuable data yet see little direct financial benefit beyond convenience and productivity gains. The piece highlights that profitability in AI is uneven, with ecosystem participants like chip makers, infrastructure hosts and enterprise integrators often profiting more reliably than the models or tools themselves, and that this layered profit structure shapes who ultimately benefits financially from the ongoing AI expansion.

10. Harnessing AI to improve ovarian cancer outcomes – UBC Faculty of Medicine

An international collaboration involving UBC researchers received $2M to apply #AI to improve prediction of ovarian cancer survival, guide treatment selection, and support clinical trial recommendations for high-grade serous ovarian cancer. Despite new treatments introduced over the past decade, the article notes that 70 per cent of patients experience recurrence and five-year survival rates remain low, motivating use of state-of-the-art #AI to identify factors tied to long-term survival. Funding includes a $1 million #AI Accelerator Grant from the Global Ovarian Cancer Research Consortium and $1 million in compute power from Microsoft’s #AI for Good Lab, supporting analysis of a large, comprehensive international dataset. The work is led within the Multidisciplinary Ovarian Cancer Outcomes Group (MOCOG), founded in 2012, bringing together investigators and patient advocates across Canada, Australia, the United Kingdom, and the United States. @Dr. Ali Bashashati, the lead Canadian researcher, emphasizes British Columbia’s long-standing leadership in ovarian cancer prevention, diagnosis, and personalized treatment, and aims to extend that leadership globally through #AI-enabled ovarian cancer care.

11. Google Maps Is Now Less Useful If You’re Not Signed In

#Google Maps is placing some signed-out users into a “limited view” that removes several in-depth features unless they sign into a #Google account. Reports cited by @9to5Google and Reddit posts starting Feb. 14 say the most notable change is that user reviews are no longer visible, while star ratings remain, and other details like photos, videos, how busy a place is, related nearby locations, restaurant menus, and dine-in, takeout, or delivery options may also be missing. Basic information such as hours, phone numbers, addresses, official website links, and navigation features like route mapping still work. The app shows a notice attributing the limited experience to issues, high traffic, or browser extensions, and suggests signing in to avoid it. Although #Google has not officially announced the change, the article says it appears intended to encourage more users to use an account, and the author could reproduce the limited view on multiple mobile and desktop versions.

12. The executive that helped build Meta’s ad machine is trying to expose it

Former Meta executive @Brian Boland told a California jury that Meta’s ad-driven incentives pushed Facebook and Instagram to draw in ever more users, including teens, despite risks. Testifying in a case alleging Meta and YouTube harmed a young woman’s mental health, he aimed to rebut @Mark Zuckerberg’s framing of the company as balancing safety and free expression by explaining how revenue goals shaped product design. Boland said Zuckerberg set a top-down culture that prioritized growth, competition, and profit over users’ wellbeing, describing a “move fast and break things” ethos and internal “lockdown” efforts focused on beating competitors rather than #user-safety. He also said he shifted from “deep blind faith” in the company to believing growth and power were Zuckerberg’s core priorities, and noted Meta has sought to limit the use of the term #whistleblower even as the judge has generally allowed it. Boland’s account directly challenges Meta’s repeated denials that it maximizes #engagement at the expense of wellbeing, and ties his testimony to the broader dispute over whether #social-media design and monetization choices contribute to alleged harm.

13. Why these startup CEOs don’t think AI will replace human roles | TechCrunch

@David Shim of Read AI and @Abdullah Asiri of Lucidya argue that #AI will increasingly replace specific tasks, but humans will still be central to deciding actions and handling higher value responsibilities. Shim likens AI tools to using #GoogleMaps or #Waze while driving: the system suggests directions, but a person remains in control, and he adds that while some roles like parts of advertising agencies may be reduced, other jobs will be needed to oversee automation. Asiri says Lucidya customers often redeploy customer support agents into new responsibilities, such as supervising humans and AI, relationship building, or business development, using time saved by automation. Shim points to meeting notetakers as an example of automation freeing people from manual note taking so they can respond faster, provide better context, and make better decisions. Overall, both CEOs frame AI as a productivity shift that changes how work is distributed rather than eliminating the need for human roles.

14. US builds website that will allow Europeans to view blocked content

The US has built a portal, freedom.gov, that Reuters says is intended to let Europeans and other users access content blocked by governments, including material classified as hate speech or terrorism. The site says “Information is power” and urges users to “Reclaim your human right to free expression,” while the domain appears to be administered by the Cybersecurity and Infrastructure Security Agency (#CISA) within the Department of Homeland Security (#DHS), despite reports it was developed by the state department. It follows the @Trump administration’s gutting of the state department’s #InternetFreedom programme, which spent more than $500m over a decade funding open-source, privacy-preserving anti-censorship tools used by journalists and activists, including during Iran’s recent internet shutdown. Sources and former officials describe freedom.gov as a politicised, performative redirection that, unlike prior projects, could route users through an opaque, central US government-controlled system rather than distributed, auditable tools. Critics argue the project targets European speech and content rules such as the #DigitalServicesAct and the UK’s #OnlineSafetyAct, shifting focus from combating shutdowns in places like China and Iran to bypassing allied governments’ restrictions on illegal content.

15. Audi Indirectly Confirmed the Electric Porsche Boxster/Cayman Will Live

An internal memo from @Audi CEO @Gernot Döllner suggests Porsche’s electric 718 Boxster and Cayman successor, and the underlying #EV platform, is still moving forward. The leaked note to Audi’s TT engineering team states that “The delivery of the platform by Porsche is not in question,” while Audi’s next-generation TT is described as an all-electric cousin being built on the same architecture. Because Porsche alone is responsible for developing the platform and Audi is effectively only clothing it as a TT, the article argues that a continuing Audi TT program indirectly confirms the Porsche 718 EV’s survival, since “no Porsche, no platform, no Audi.” The remaining uncertainty is whether an #ICE version of the platform, and thus gasoline 718 variants, still has a future, as updates have been sparse and packaging makes a return of the 4.0-liter flat-six unlikely, with a turbocharged 3.0 from the 911 floated as a more plausible option if it can fit.

16. CNN: Microsoft Warns AI Could Widen Inequality, Pledges $50 Billion Global Investment

At the India AI Impact Summit in New Delhi, @Microsoft leaders said artificial intelligence could either narrow global inequalities or widen them if access remains concentrated in wealthy nations and fails to expand to developing regions. They warned that current AI adoption in the Global North is roughly double that of the Global South, which risks reinforcing economic divides without urgent, inclusive investment. In response, Microsoft announced it is on track to invest $50 billion by 2030 to boost AI infrastructure, data centres, connectivity and skills training across lower-income countries, aiming to expand access, enable local innovation and prevent an “AI divide” from deepening existing disparities. The plan includes expanding internet access, supporting education and workforce development, improving multilingual capabilities and partnering with governments to build AI ecosystems that benefit underserved populations, while stressing the need for global cooperation to ensure AI’s benefits reach all communities fairly.

17. Microsoft Copilot Chat error sees confidential emails exposed to AI tool

@Microsoft acknowledged a bug in #Microsoft365 #CopilotChat that mistakenly allowed the AI assistant to access and summarise some users’ emails marked confidential. The company said the issue affected enterprise users by surfacing content from emails authored by the user and stored in Outlook desktop Drafts and Sent Items, and it deployed a worldwide configuration update to fix it. Although @Microsoft said its access controls and data protection policies remained intact and no one gained access beyond what they were already authorised to see, the behaviour violated its intended design to exclude protected content from Copilot access. Reports cited by Bleeping Computer and a service alert said confidential-labelled emails were being incorrectly processed even where #sensitivity labels and #dataLossPrevention policies were configured, and the issue was also referenced on an NHS England support dashboard, with the NHS saying patient information was not exposed. Experts including @NaderHenein of Gartner and Professor @AlanWoodward said rapid rollout of new #generativeAI features makes such mistakes likely, and argued for stronger governance and privacy-by-default, opt-in deployment to reduce #dataLeakage risk.

Deutsche Telekom says #quantum teleportation is now practical, after its T-Labs demonstrated teleporting quantum information over 30 km of live, commercial fiber in Berlin while running alongside classical internet traffic, using commercially available hardware from @Qunnect. The setup centered on Qunnect’s Carina platform, which generates pairs of entangled photons distributed over telecom fiber, enabling the recreation of an identical quantum particle at the destination using pre-shared entanglement rather than sending the particle itself. Deutsche Telekom reports about 90% average accuracy for the teleported data and notes plans to extend this to longer distances, more locations, and multi-node configurations, with a teleportation wavelength of 795 nm aimed at compatibility with platforms like neutral-atom quantum computers, atomic clocks, and quantum sensors. The work suggests quantum communications can move from lab experiments toward deployment on existing telecom infrastructure, with implications for #distributed quantum computing, #quantum-secure communication, #quantum sensor networks, and cloud-based quantum services. Deutsche Telekom also says @Cisco ran a similar demo in New York City using the same Qunnect hardware to connect data centers.

19. Meta wins patent for AI that could post for dead social media users

#Meta has been granted a patent for a hypothetical #LLM-based chatbot that could simulate a person’s social media activity, including posting and commenting after long absences and even after death. The patent, filed in 2023 by @Andrew Bosworth and granted in late December, describes an AI-trained digital clone that could like, comment, and potentially simulate audio or video calls with followers, originally framed as support for high-profile users like influencers taking a break. A Meta spokesperson told Business Insider the company no longer plans to move forward with the concept, even though Meta now holds the patent. The idea echoes similar efforts like a 2021 #Microsoft patent that was later abandoned as “disturbing,” while startups continue building #AI “deadbots” and the concept draws ethical scrutiny from legal, creative, and grief experts. Concerns about misuse have prompted actions such as @Matthew McConaughey seeking protections for his digital likeness, and end-of-life planning experts urging the public to set clear rules for AI use after death.

20. Google Wants to Use Your Emails to Train Its AI. Here’s How to Turn That Off

Google is expanding how its #AI systems may use personal Gmail and Workspace emails to improve models: unless individuals disable data sharing in their account settings, the company could feed conversational data from messages into training pipelines to enhance assistants and predictive features. The article explains how to turn off Google’s email training option by navigating to Privacy & Personalization in your Google Account, locating “Data used for #AI training,” and deselecting the toggle that permits email content to be included in future model learning, which stops Google from using that specific data while still keeping core services operational. It highlights why users may want to adjust this setting, noting that even when Google claims to use messages for “improving helpfulness,” many people prefer to restrict sensitive content from being processed in large model training due to privacy and corporate data usage concerns. The piece also outlines differences between Google’s opt-out training model and the stricter protections in some enterprise settings, where administrators can enforce no-training policies to safeguard confidential information. Overall, the article guides readers through simple steps to regain control over how their email content interacts with emerging AI training practices so their messages are not automatically ingested into broader learning systems.

21. Apple sued by West Virginia over alleged failure to prevent CSAM – 9to5Mac

West Virginia has filed a consumer protection lawsuit against @Apple, alleging it failed to prevent #CSAM from being stored and shared through iOS devices and iCloud services like iMessage and Photos. The state’s attorney general, @John “JB” McCuskey, claims Apple prioritized privacy branding and business interests over child safety, while companies like @Google, @Microsoft, and @Dropbox have been more proactive using tools such as #PhotoDNA. The article notes that Apple announced CSAM-related measures in 2021, including a Photos-based detection system that was later abandoned after privacy researchers warned it could enable government demands for access to private data, though Apple has shipped other child-safety features including in iOS 26. Apple responded that protecting safety and privacy, especially for children, is central to its work, citing parental controls and #CommunicationSafety that can intervene when nudity is detected in Messages, shared Photos, AirDrop, and FaceTime. The lawsuit centers on whether Apple’s current approach sufficiently addresses CSAM risks on its platform despite its stated commitment to both privacy and child safety.

A cross-partisan grassroots movement is pushing back against the rapid expansion of the #AI industry, focusing especially on the #data centers that power it and the local harms they associate with that growth. In Richmond, Virginia, nearly 200 Republicans and Democrats gathered to protest what they described as damage to quality of life and the environment, testifying about concerns like electricity and water strain, noise pollution, and rising bills, with some lawmakers telling them they are “getting a sh-t deal.” Public anxiety extends beyond infrastructure to fears about job displacement, misinformation, and social and emotional harms, and a 2025 @Pew poll found far more Americans concerned than excited about AI’s growing role in daily life. While industry boosters frame AI as a geopolitical race with China and many tech leaders promise benevolent digital assistants, critics cite profit-driven tactics such as erotica, deepfake generation, and in-chatbot ads as reasons for distrust. Activists are responding with rallies, town halls, sermons, contract protections, lawsuits, and runs for office, and researchers at Data Center Watch reported that protests helped stall $98 billion in data-center projects in Q2 2025, reflecting a widening “Team Human” effort to slow AI’s unchecked growth.

23. Gemini will now generate musical slop for users

Google has added #music generation to @Google Gemini via its new Lyria 3 model, letting users create a 30-second song from a text prompt, photo, or video, plus AI-generated lyrics and optional cover art through #NanoBanana. Lyria 3 appears in the Gemini Tools menu alongside other generators like #Veo, and Google says it improves on earlier Lyria versions with lyric generation, greater control over style and vocals, and more realistic, musically complex output, while keeping the 30-second limit. The model is also being used to update YouTube’s Dream Track and follows earlier deployments such as Dream Track experiments and Music AI Sandbox for musicians. Anticipating copyright concerns, Google says Lyria 3 is intended for “original expression,” treats prompts naming specific artists as broad inspiration rather than direct imitation, and uses filters to avoid tracks too close to existing songs, while acknowledging those safeguards may not be perfect. Overall, Google is expanding easy, prompt-based music creation inside Gemini while trying to frame the feature as creative and copyright-aware despite the risks and limitations it concedes.

24. Meta’s ‘Malibu 2’ Smartwatch to Focus on Health Tracking, AI

Meta is reviving its long‑rumored wearable effort with a new smartwatch project, internally code‑named “Malibu 2,” that is expected to debut in 2026. The watch is expected to focus on health and fitness monitoring combined with native #AI features powered by the company’s own Meta AI assistant, as part of a broader push into wearable tech alongside its smart glasses and XR products. It marks a strategic return to wrist‑based devices after Meta cancelled an earlier smartwatch concept in 2022 amid cost cuts within its Reality Labs division. The revived effort, reported by multiple outlets citing people familiar with internal planning, positions Meta to compete directly with existing players in the wearable space by embedding intelligent assistance and health tracking at the core of the user experience. The watch could complement other devices in the ecosystem, such as #AR smart glasses, while offering personalized insights and contextual interactions right from the wearer’s wrist. The renewed focus underscores how Meta is aligning its hardware strategy with growing demand for connected devices that blend biometric tracking and artificial intelligence so users can interact seamlessly with digital services, notifications and voice‑driven features without relying solely on smartphones. If successful, Malibu 2 could deepen Meta’s footprint in consumer tech and data platforms as it seeks to expand beyond social networks into integrated AI‑enabled personal technology.

25. Remake specialist Bluepoint Games, co-developer of God of War Ragnarok, shut down by Sony

@Sony is shutting down Bluepoint Games, the remake specialist behind well-regarded reissues such as Demon’s Souls, Shadow of the Colossus, and Uncharted, resulting in 70 job losses when the studio closes next month. The decision, first reported by Bloomberg, was described by Sony as coming after a recent business review, and @PlayStation Studios head @Hermen Hulst cited an increasingly challenging industry environment with rising development costs, slowed growth, changing player behavior, and broader economic headwinds. Sony acquired Bluepoint in 2021 after years of collaboration on remakes and collections of first-party IP, and Bluepoint most recently co-developed 2022’s God of War Ragnarok. The studio had been working on a #live service title that was cancelled in January 2025, part of Sony’s retreat from a live service push associated with then-CEO @Jim Ryan. Sony says it will try to find roles for some affected staff within its global studios, while positioning the closure as part of efforts to build games more sustainably and continue its FY26 roadmap.

26. Nvidia is in talks to invest up to $30 billion in OpenAI, source says

@Nvidia is in discussions to invest up to $30 billion in @OpenAI as part of a funding round that could value the #AI startup at a $730 billion pre-money valuation, according to CNBC. A source said this potential investment is separate from the companies’ previously announced $100 billion #infrastructure agreement from September and is not tied to deployment milestones, while the September framework contemplated staged investments linked to new supercomputing capacity coming online. The new $30 billion deal is not final and details may change, though Nvidia could still participate in later rounds aligned with the earlier infrastructure structure. The talks come amid recent questions about the status of the September agreement, including a report that it was “on ice,” while @SamAltman and @JensenHuang have publicly played down rift concerns. OpenAI is also in accelerated fundraising talks with other investors, with the total round previously reported by CNBC as potentially around $100 billion and possibly closing in two parts starting with strategics such as @Amazon, @Microsoft, and Nvidia.

27. NASA chief blasts Boeing, space agency for failed Starliner astronaut mission

@Jared Isaacman blamed both #Boeing and #NASA for mishandling the first crewed test flight of the #Starliner spacecraft, saying the most serious problems were leadership and decision making, not just hardware. A 311-page report recounts how the June 2024 mission launched @Butch Wilmore and @Suni Williams successfully, but multiple thrusters failed as Starliner approached the #InternationalSpaceStation, complicating steering and docking, and NASA and Boeing ultimately returned Starliner to Earth uncrewed while the astronauts came home nine months later on #SpaceX #CrewDragon. Isaacman said the incident was classified as a #TypeAMishap, a top category used for events like #Challenger, #Columbia, and #Apollo1, though this mission preserved crew safety, and he cited unresolved thruster root-cause work alongside design and engineering deficiencies that must be corrected. The report also describes eroded trust and overly risk-tolerant leadership across both organizations, with more than 30 launch attempts creating schedule pressure and decision fatigue, and crew return debates devolving into unprofessional conduct. A Florida Institute of Technology aerospace leader said such organizational failures can outweigh technical ones, and Isaacman’s rare public scolding signals a shift in how NASA may conduct business while he promises leadership accountability without detailing specifics.

That’s all for today’s digest for 2026/02/20! We picked and processed 27 articles. Stay tuned for tomorrow’s collection of insights and discoveries.

Thanks, Patricia Zougheib and Dr Badawi, for curating the links.

See you in the next one! 🚀